I know a lot of enterprise cloud customers have been watching the recent incident with Google Cloud (GCP) and UniSuper. For those of you who haven’t seen it: UniSuper is an Australian pension fund that hosted its services on Google Cloud. For some weird reason, its private cloud project was completely deleted. Google’s postmortem of the incident is here: https://cloud.google.com/blog/products/infrastructure/details-of-google-cloud-gcve-incident . It’s fascinating reading. What surprises me in particular is that GCP takes full blame for the incident. There must be some very interesting calls happening between Google and its other enterprise customers.

There are some fascinating morsels in Google’s postmortem of the incident. Consider this passage:

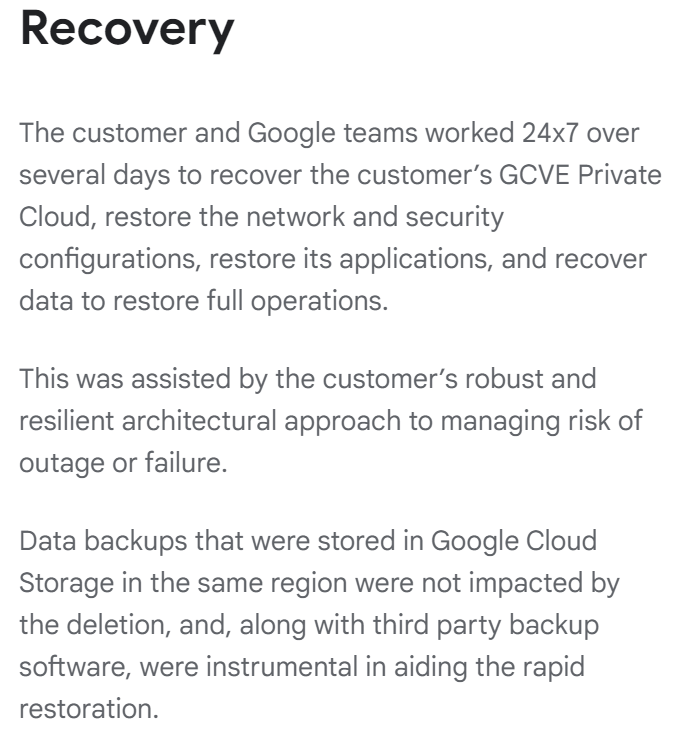

Data backups that were stored in Google Cloud Storage in the same region were not impacted by the deletion, and, along with third party backup software, were instrumental in aiding the rapid restoration.

https://cloud.google.com/blog/products/infrastructure/details-of-google-cloud-gcve-incident

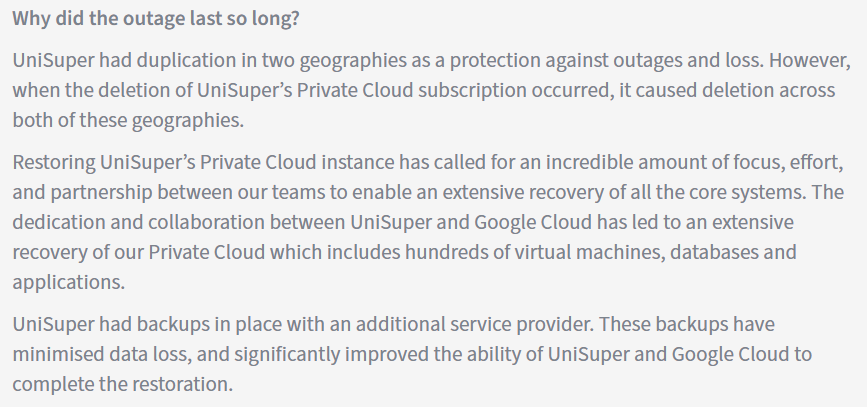

Fortunately for UniSuper, the data in Google Cloud Storage didn’t seem to be affected and they were able to restore from there. But it looks like UniSuper also had another set of data stored with a different provider. The following is from UniSuper’s explanation of the event at: https://www.unisuper.com.au/contact-us/outage-update .

UniSuper had backups in place with an additional service provider. These backups have minimised data loss, and significantly improved the ability of UniSuper and Google Cloud to complete the restoration.

https://www.unisuper.com.au/contact-us/outage-update

Having a full set of backups with another service provider has to be terrifically expensive. I’d be curious to see a discussion of who the additional service provider is and a discussion of the costs. I also wonder if the backup cloud is live-synced with the GCP servers or if there’s a daily/weekly sync of the data to help reduce costs.
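To make the live-sync versus daily/weekly question a bit more concrete, here is a minimal sketch of how the sync interval bounds the worst-case data loss. The function name and the intervals are my own illustrative assumptions; nothing here comes from UniSuper's or Google's statements.

```python
from datetime import timedelta


def worst_case_data_loss(sync_interval: timedelta,
                         transfer_time: timedelta = timedelta(0)) -> timedelta:
    """Upper bound on how stale the offsite copy can be when the primary vanishes.

    Assumes (hypothetically) that a sync starts every `sync_interval` and takes
    `transfer_time` to finish. If the primary is lost just before a sync
    completes, the newest usable copy is from the previous sync's start.
    """
    return sync_interval + transfer_time


# Hypothetical schedules, purely for comparison.
schedules = [
    ("hourly", timedelta(hours=1)),
    ("daily", timedelta(days=1)),
    ("weekly", timedelta(weeks=1)),
]

for label, interval in schedules:
    loss = worst_case_data_loss(interval)
    print(f"{label:>7}: up to {loss} of changes could be lost")
```

A less frequent sync is presumably cheaper, but the exposure window grows in step with the interval, which is the trade-off I'd love to see UniSuper discuss.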

The GCP statement seems to say that the restoration was completed with just the data from Google Cloud Storage, while the UniSuper statement is a bit more ambiguous – you could read the statement as either (1) the offsite data was used to complete the restoration or (2) the offsite data was useful but not vital to the restoration effort.

Interestingly, an HN comment indicates that the Australian financial regulator requires this multi-cloud strategy: https://www.infoq.com/news/2024/05/google-cloud-unisuper-outage/ .

I did a quick dive to figure out where these requirements are coming from, and as best I could tell, they come from the APRA’s Prudential Standard CPS 230 – Operational Risk Management document. Here are some interesting lines from there:

Australian Prudential Regulation Authority (APRA) – Prudential Standard CPS 230 Operational Risk Management

- An APRA-regulated entity must, to the extent practicable, prevent disruption to critical operations, adapt processes and systems to continue to operate within tolerance levels in the event of a disruption and return to normal operations promptly once a disruption is over.

- An APRA-regulated entity must not rely on a service provider unless it can ensure that in doing so it can continue to meet its prudential obligations in full and effectively manage the associated risks.

I think “rely on a service provider” is the most interesting phrase here. I wonder if, by keeping a set of data on another cloud provider, UniSuper can justify to the APRA that it’s not relying on any single cloud provider but instead has diversified its risks.

I couldn’t find any discussion about the maximum amount of downtime allowed, so I’m not sure where the “4 week” tolerance from the HN comment came from. Most likely that is from industry norms. But I did find some text about tolerance levels of disruptive events:

Australian Prudential Regulation Authority (APRA) – Prudential Standard CPS 230 Operational Risk Management

- 38. For each critical operation, an APRA-regulated entity must establish tolerance levels for:

  (a) the maximum period of time the entity would tolerate a disruption to the operation
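As a rough illustration of what a tolerance level like the one in paragraph 38(a) might look like in practice, here is a minimal sketch. The operation name, the outage length, and the class and function names are all hypothetical; the four-week figure is only the number from the HN comment, not something I found in CPS 230.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class CriticalOperation:
    """A critical operation with a tolerance level in the spirit of 38(a)."""
    name: str
    max_disruption: timedelta  # hypothetical value chosen by the entity


def within_tolerance(op: CriticalOperation, outage: timedelta) -> bool:
    """Return True if the outage did not exceed the operation's tolerance."""
    return outage <= op.max_disruption


# Hypothetical operation; four weeks is the unverified figure from the HN comment.
member_access = CriticalOperation("member account access", timedelta(weeks=4))

# Hypothetical outage length, used only to exercise the check.
outage = timedelta(days=14)

status = "within" if within_tolerance(member_access, outage) else "outside"
print(f"{member_access.name}: {outage.days}-day outage is {status} tolerance")
```

The point is simply that the regulation asks each entity to pick its own number per operation; the standard itself does not appear to set one.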

It’s definitely interesting to see how requirements for enterprise cloud customers grow from their regulators and other interested parties. There’s often some justification underlying every decision (such as duplicating data across clouds) no matter how strange it seems at first.

APRA History On The Cloud

While digging into this subject, I found it quite interesting to trace how the APRA changed its tune about cloud computing over the years. Back in 2010, the APRA wanted to “emphasise the need for proper risk and governance processes for all outsourcing and offshoring arrangements.” Here’s an interesting excerpt from their 2010 letter sent to all APRA-overseen financial companies:

Although the use of cloud computing is not yet widespread in the financial services industry, several APRA-regulated institutions are considering, or already utilising, selected cloud computing based services. Examples of such services include mail (and instant messaging), scheduling (calendar), collaboration (including workflow) applications and CRM solutions. While these applications may seem innocuous, the reality is that they may form an integral part of an institution’s core business processes, including both approval and decision-making, and can be material and critical to the ongoing operations of the institution.

https://www.apra.gov.au/sites/default/files/Letter-on-outsourcing-and-offshoring-ADI-GI-LI-FINAL.pdf

APRA has noted that its regulated institutions do not always recognise the significance of cloud computing initiatives and fail to acknowledge the outsourcing and/or offshoring elements in them. As a consequence, the initiatives are not being subjected to the usual rigour of existing outsourcing and risk management frameworks, and the board and senior management are not fully informed and engaged.

While the letter itself seems rather innocuous, it seems to have had a bit of a chilling effect on Australian banks: this article comments that “no customers in the finance or government sector were willing to speak on the record for fear of drawing undue attention by regulators”.

An APRA document published on July 6, 2015 seems to be even more critical of the cloud. Here’s a very interesting quote from page 6:

In light of weaknesses in arrangements observed by APRA, it is not readily evident that risk management and mitigation techniques for public cloud arrangements have reached a level of maturity commensurate with usages having an extreme impact if disrupted. Extreme impacts can be financial and/or reputational, potentially threatening the ongoing ability of the APRA-regulated entity to meet its obligations.

https://www.apra.gov.au/sites/default/files/information-paper-outsourcing-involving-shared-computing-services_0.pdf

Then, just three years later, the APRA seemed to be much friendlier to cloud computing. A ComputerWorld article entitled “Banking regulator warms to cloud computing”, published on September 24, 2018, quotes the APRA chair as acknowledging “advancements in the safety and security in using the cloud, as well as the increased appetite for doing so, especially among new and aspiring entities that want to take a cloud-first approach to data storage and management.”

It’s curious to see how organizations’ views of the cloud have evolved. I think UniSuper and GCP’s quick restoration of the deleted cloud project will result in a much friendlier environment for the cloud.