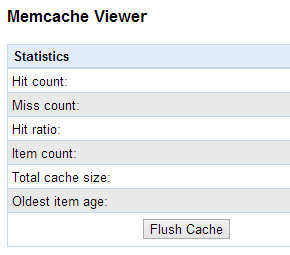

Recently there was a discussion in the App Engine forums about memcache calls that were hanging; in one instance, a memcache async put call was taking 2 hours to complete!

This was a particularly interesting issue, and I’d like to share a number of thoughts I had while solving it:

App Engine has a number of internal rate limiting/throttling controls on services, and moving large quantities of data around can quickly cause an application to hit these limits. In fact, I suspect that this was the actual problem: the original poster’s application was storing multiple megabytes of data into memcache in multiple asynchronous calls that occurred simultaneously, a design that could easily hit several different rate limits. My suggestion for solving this problem (which ultimately worked) was to add a short delay after each memcache put call and to split the data across a larger number of memcache put calls. I suggested this fix for several reasons:

- Adding a short delay after each memcache put call buys time for App Engine’s rate limit to reset; it prevents App Engine from thinking that the application is malfunctioning or attempting to overwhelm the memcache pipeline.

- Delays are easy to implement. In Python it’s one call to time.sleep(number of seconds to delay), and in Java it’s a simple call to Thread.sleep(number of milliseconds to delay); note that Thread.sleep takes milliseconds rather than seconds, and that you have to catch or declare the potential InterruptedException. The Go call is similar to Python: call time.Sleep(delay duration). In PHP a delay is just as simple: all you need to do is call sleep(delay seconds).

- Increasing the number of memcache put calls means that a smaller amount of data is being stored for each memcache put. This reinforces the first point: it helps prevent the pipeline to memcache from being overwhelmed with data.

- The delay doesn’t need to be long: two to five seconds is more than enough. In some cases, even a one-second delay is enough.
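The fix described above (smaller puts, with a pause after each one) can be sketched roughly as follows. This is a minimal illustration rather than the original poster's code: `put` is a hypothetical stand-in for whatever memcache call the application uses, and the key naming scheme, the default chunk size, and the default delay are all assumptions chosen for the example.

```python
import time


def split_into_chunks(data, chunk_size):
    """Return consecutive slices of `data`, each at most `chunk_size` long."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def put_in_chunks(put, key_prefix, data, chunk_size=500 * 1024,
                  delay_seconds=2.0, sleep=time.sleep):
    """Store `data` as several smaller entries, pausing after each put.

    `put(key, value)` is a hypothetical stand-in for the real memcache call.
    `sleep` is injectable so the pause can be skipped or recorded in tests.
    Returns the keys written, in order, so the data can be reassembled later.
    """
    keys = []
    for index, chunk in enumerate(split_into_chunks(data, chunk_size)):
        key = "%s:%d" % (key_prefix, index)  # assumed key scheme: prefix:index
        put(key, chunk)
        keys.append(key)
        sleep(delay_seconds)  # give any rate limit time to reset before the next put
    return keys
```

In an App Engine Python application, `put` would presumably be something like `memcache.set` from `google.appengine.api`; passing it in as a parameter keeps the sketch runnable outside that environment.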

Fortunately, the above fix worked in this case. But if it had not, I was prepared with a number of other possible fixes. For instance, I would have suggested using the task queue: split the data among multiple tasks, and then have each task store its share of the data into memcache. Since each task would constitute a separate request and the tasks may be spread across multiple instances, there’s less of a chance for any rate limiting to kick in. If that option wasn’t palatable for any reason, then another option would be to switch to dedicated memcache; it seems to be much more forgiving with regard to usage.
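The task queue variant might look something like the sketch below. It only shows the shape of the idea: `enqueue` is a hypothetical wrapper around the real task queue API, `put` is a hypothetical stand-in for the memcache call, and the payload layout is invented for illustration.

```python
def build_task_payloads(key_prefix, data, chunk_size):
    """Split `data` into one payload per task; each payload names its own key."""
    payloads = []
    for index, start in enumerate(range(0, len(data), chunk_size)):
        payloads.append({
            "key": "%s:%d" % (key_prefix, index),  # assumed key scheme
            "value": data[start:start + chunk_size],
        })
    return payloads


def fan_out(enqueue, key_prefix, data, chunk_size):
    """Hand each payload to `enqueue` (hypothetical task queue wrapper).

    Returns the keys that the tasks will eventually write.
    """
    payloads = build_task_payloads(key_prefix, data, chunk_size)
    for payload in payloads:
        enqueue(payload)
    return [payload["key"] for payload in payloads]


def handle_task(payload, put):
    """Body of a single task: store one chunk with the supplied put function."""
    put(payload["key"], payload["value"])
```

Real task payloads have their own size limits, so in practice each task may need to carry a reference to its slice of the data rather than the bytes themselves.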

If none of the above options had worked, I would have suggested dumping memcache entirely and writing to the datastore/Cloud SQL. While memcache is a terrific service, it is a cache rather than a reliable store: entries can be evicted at any time. Persisting the data in a durable store is a much better way to manage large quantities of information.

The short version of this post: hanging or slow memcache calls can be fixed by inserting delays after each call and decreasing the amount of data handled in each memcache call.